image: André Seiti

Year

2018

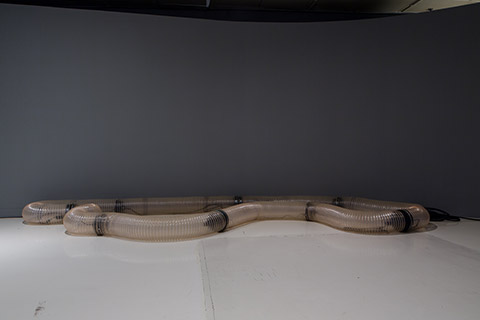

Where

Originally loosely inspired by the neural networks of our own brain – particularly our visual cortex – many of the deep learning algorithms in use today have existed for decades, but have only recently seen a sharp rise in popularity. This is often attributed to recent increases in computational power and to the wide availability of training data.

However, progress is undeniably driven by multibillion-dollar investments from organizations specialized in mass surveillance – technology companies whose business models depend on psychographically targeted advertising, as well as government organizations focused on the so-called war on terror. They collect more data than they know what to do with. They desperately need algorithms to process, compress and understand this data – to understand text, images and sounds – in order to produce executive summaries for our comparatively much smaller human minds. Their goal is to automate the comprehension of big data. But what does it mean to understand? What does it mean to learn or to see?

So-called deep neural networks are so named because they have many layers. Each layer is a high-dimensional nonlinear transformation that learns to extract features from the previous layers and to build a hierarchy of increasingly abstract representations. Analyzing data with such a deep neural network is therefore a journey through many dimensions and transformations in space and time. What happens along that journey is hard to interpret, which is known as the problem of the “inscrutability of the black box of deep neural networks”; amidst the depths of these representations lies the great unknown.
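The idea of a layer as a nonlinear transformation, and of a network as a stack of such layers, can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the layer widths, the `tanh` nonlinearity, the random (untrained) weights and the helper names `make_layer`, `apply_layer` and `forward` are all hypothetical choices made here, not details of any network discussed in this text.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Create one layer: a random weight matrix and a zero bias vector.

    The weights are untrained; a real network would learn them from data.
    """
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def apply_layer(layer, x):
    """One nonlinear transformation: an affine map followed by tanh."""
    weights, biases = layer
    return [
        math.tanh(sum(w * v for w, v in zip(row, x)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(layers, x):
    """Pass x through every layer, keeping each intermediate representation."""
    representations = [x]
    for layer in layers:
        x = apply_layer(layer, x)
        representations.append(x)
    return representations


rng = random.Random(0)
sizes = [64, 32, 16, 8]  # hypothetical layer widths, chosen for illustration
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

noise = [rng.uniform(-1.0, 1.0) for _ in range(sizes[0])]  # a random input vector
reps = forward(layers, noise)
print([len(r) for r in reps])  # [64, 32, 16, 8]
```

Each entry of `reps` is the same input seen from a different space. With trained weights, the later entries would hold the more abstract representations; here they only show the mechanics of the journey.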

A trained neural network can take purely “random” white noise,1 send it on a journey through various dimensions and transformations in time and space, and produce what is effectively analogous to a Rorschach blot2 – a more structured noise with a more particular distribution. When we look at the output of this neural network, we complete the process of creating meaning by projecting ourselves onto it, because we are machines that yearn for structure. This is what we do. It is what we have always done.3 It is how we survive in nature. It is how we relate to one another. We look at the world around us, we look at the sky and we invent stories, we invent things and believe in them. We seek regularities and we project meaning onto them based on who we are and what we know.

The image that we see in our conscious mind is not a mirror image of the outer world, but rather a reconstruction based on our prior beliefs and expectations. Everything that we see, read or hear – even the sentences that I am writing now – we try to make sense of by relating it to our own past experiences, filtered by our prior knowledge and beliefs. Perhaps our own perception is more like an artificial neural network than we would like to admit.

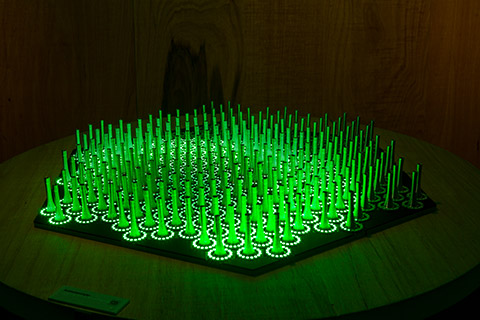

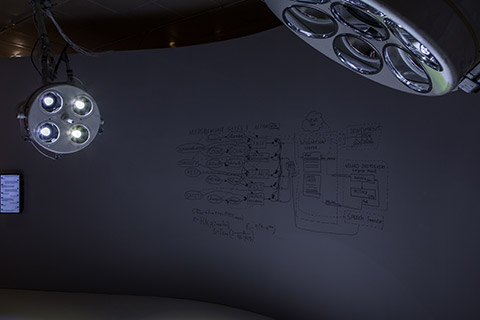

Both Learning to See (2017) and Deep Meditations (2018) are ongoing series of works that use cutting-edge machine learning algorithms as a means to reflect on ourselves and on how we understand the world. The works are part of a larger line of research into self-affirming human cognitive biases, our inability to see the world from other people’s points of view and the resulting social polarization.

Learning to See reveals and amplifies the bias in machine learning systems, demonstrating how the model’s prior knowledge and experience – also known as “training data” – completely shape its predictions and output. On another level, the work also illustrates a meaningful human-machine interaction for artistic co-creation – something between digital puppetry and creative collaboration. An artificial neural network loosely inspired by our own visual cortex looks through cameras at the world and tries to assign a meaning to what it sees within the context of what it has seen before. It attempts to deconstruct and reconstruct the live camera feed using the features and representations that it has learned, having been exposed to a comparatively limited “life experience” or “worldview” – in this specific case, “trained” on a large dataset limited to images of waves, fire, clouds and flowers. Later, the work encounters unfamiliar scenes, such as hands, fabrics, wires and keys. Evidently, it can only see what it already knows. Just like us.4
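How training data bounds what a model can “see” can be shown with a toy stand-in. The sketch below is not the neural network used in the work: it is a plain nearest-neighbour lookup, chosen only because it makes the dependence on training data easy to inspect. The four-value “images”, their numbers and the function names `train` and `see` are hypothetical.

```python
def squared_distance(a, b):
    """Sum of squared differences between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train(examples):
    """'Training' in this toy is simply memorising the labelled patterns."""
    return list(examples)


def see(model, observation):
    """Interpret an observation as the closest pattern the model already knows."""
    return min(model, key=lambda item: squared_distance(item[1], observation))


# Hypothetical four-value "images"; the numbers carry no real meaning.
training_data = [
    ("waves", [0.1, 0.3, 0.9, 0.8]),
    ("fire", [0.9, 0.5, 0.1, 0.0]),
    ("clouds", [0.8, 0.8, 0.9, 0.9]),
    ("flowers", [0.7, 0.2, 0.6, 0.3]),
]
model = train(training_data)

hand = [0.8, 0.6, 0.5, 0.4]  # something that never appeared in training
label, pattern = see(model, hand)
print(label)  # flowers
```

Whatever is shown to `see`, the answer is always one of the four memorised categories. The unfamiliar input is not rejected; it is read in terms of the only world the model has been given.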

Deep Meditations is a meditation on life, nature, the universe and our subjective experience of them. It is a deep dive into the inner world of an artificial neural network trained on everything – and a controlled exploration of that world. There are literally images tagged “everything” on the Flickr photo-sharing site – just as there are images tagged the world, the universe, art, life, love, faith, ritual, God and much more. On the one hand, the intention is to present a piece for introspection and self-reflection, a mirror for ourselves, our own mind and how we understand the world; on the other, it is also a window into the mind of the machine as it tries to understand its observations in its own computational way. However, there is no clear separation between the mirror and the window. It is impossible to separate the two, since the act of looking through this window projects us through it. When we return our gaze to the slowly evolving meditative images conjured up by the neural network, on the border between abstract and representational, we project ourselves back onto them, we construct their meaning, we invent stories, because we see things not as they are, but as we are.5

1 <https://commons.wikimedia.org/wiki/File:White-noise-mv255-240x180.png>

2 <https://commons.wikimedia.org/wiki/File:Rorschach_blot_01.jpg>

3 <https://commons.wikimedia.org/wiki/File:SGR_1806-20_108685main_SRB1806_20rev2.jpg>

4 <http://www.memo.tv/wpmemo/wp-content/uploads/2017/07/msa_gloomysunday_003.jpg>

<http://www.memo.tv/wpmemo/wp-content/uploads/2017/07/msa_gloomysunday_006.jpg>

5 <http://www.memo.tv/wpmemo/wp-content/uploads/2018/07/deepmeditations_fakes008421-16.jpg>

<http://www.memo.tv/wpmemo/wp-content/uploads/2018/07/deepmeditations_fakes009402-18.jpg>

<http://www.memo.tv/wpmemo/wp-content/uploads/2018/07/deepmeditations_fakes009422-22.jpg>

<http://www.memo.tv/wpmemo/wp-content/uploads/2018/07/deepmeditations_fakes009884-17.jpg>

<http://www.memo.tv/wpmemo/wp-content/uploads/2018/07/deepmeditations_fakes010105-16.jpg>

<http://www.memo.tv/wpmemo/wp-content/uploads/2018/07/deepmeditations_fakes010115-2.jpg>

<http://www.memo.tv/wpmemo/wp-content/uploads/2018/07/deepmeditations_msa_deepmeditations_000.jpg>