image: André Seiti

in partnership with Itaú Cultural

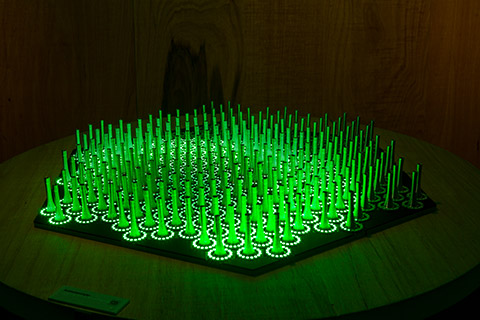

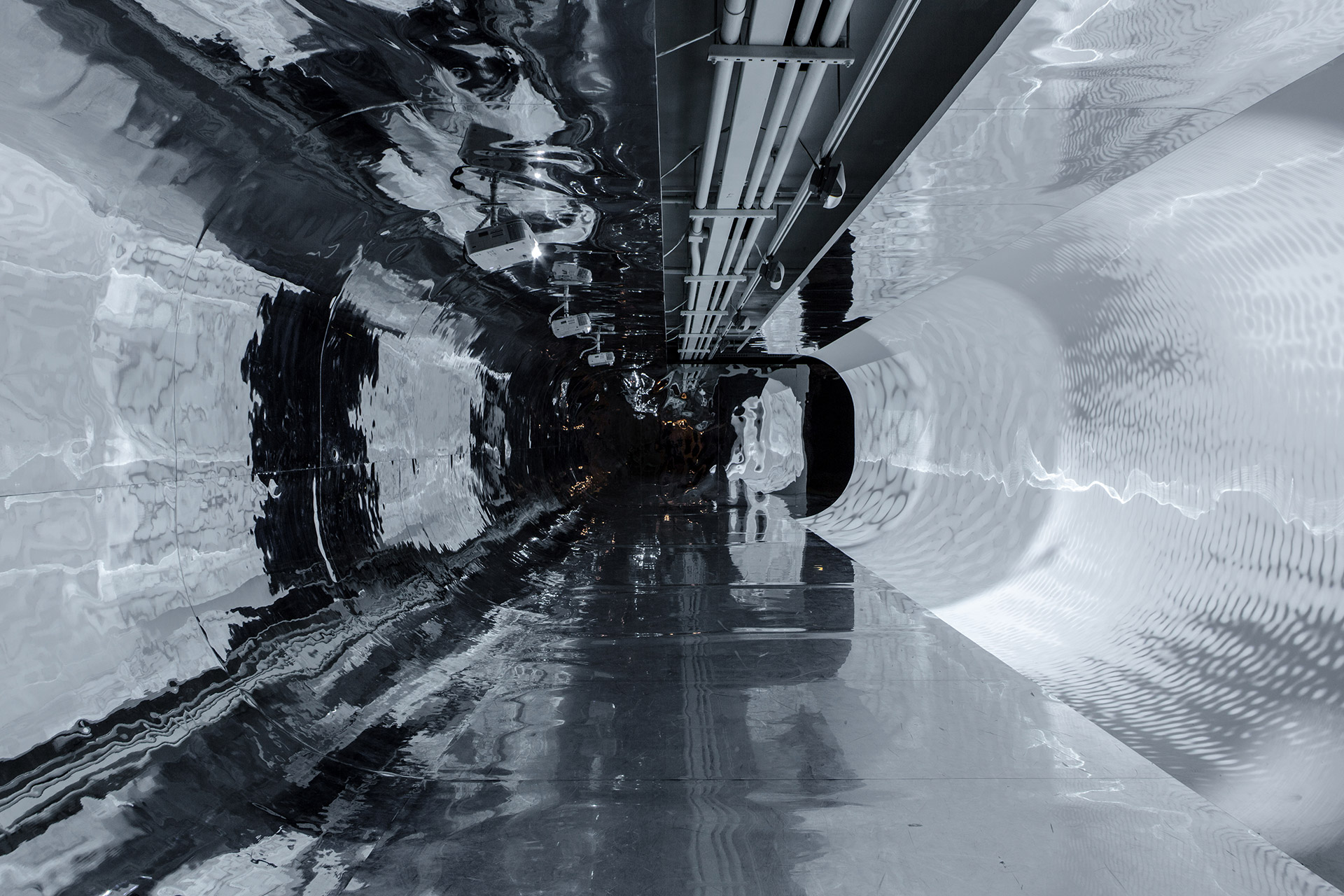

Quantum is an interactive and immersive installation: a sort of quantum simulator that uses the rectangular architecture of the second basement of Itaú Cultural to give visitors the feeling of entering a fluid, subatomic universe of multiple times and horizons.

In the installation, the presence of visitors triggers changes in the system’s state, both on the projection screens and in the sound. As you enter the installation, the system outlines your shadow, and this shadow, unlike the one you usually cast, behaves according to quantum mechanics.

Quantum mechanics as a theory is notoriously hard to understand. Names such as Einstein, Bohr and Feynman have admitted to being puzzled by its quirks. In 1981, Richard Feynman described the issue in a memorable way: "Nature is not classical, dammit, and if you want to make a simulation of nature, you’d better make it quantum mechanical, and by golly it’s a wonderful problem, because it does not look so easy."1

After Feynman’s seminal "Simulating Physics with Computers"2 lecture in 1981, countless studies and physical implementations of quantum simulation have been conducted. In other words, despite its difficulty, it did become popular. In the scientific literature, it is easy to find articles describing either implemented or projected simulators to study superconducting circuits, quantum atomic gases, trapped ion ensembles and photonic systems, amongst many others.

What these research platforms have in common is that they tackle complex problems requiring extraordinary computing capacity. Among the possibilities, the highlights are the research and development of technologies such as quantum computers and communication systems, which promise performance far beyond that of their classical counterparts.

Future substitutes for silicon

Information is physical. Digital electronic computers process information by transforming on/off signals (bits) into complex symbols. From the physical-logical point of view, the main component in any digital computer is the switch or circuits that, interacting with the memory, transform an input state into an output state, through control of the electrical current (on/off). The number of bits that an electronic computer can process in a given period of time determines its capacity for problem solving, or computing.

For the last few decades, each new hardware generation has presented the following characteristics: massive increases in calculation capacity and speed, striking reductions in the physical dimensions of devices, and vertiginous drops in prices. What appears to rule this pattern is Moore’s law. In 1965, Intel co-founder Gordon Moore predicted that the number of components on a single microchip would double every year, a pace he revised in 1975 to every two years; the figure of eighteen months is the one most often quoted.

What is behind Moore’s law is not a law of physics. It is, in fact, a tendency of technological evolution that has made it possible for engineers to produce progressively smaller transistors. When a transistor's size is reduced, the flow of electrons has a smaller path to cross, enabling a higher speed of bit processing. The smaller size also allows for the creation of integrated circuits with a larger number of connected transistors, furthering the depth of computing. With smaller transistors and better circuit integration, computers have evolved: their calculation capacity has doubled even while they have become smaller and less expensive.
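The doubling pattern described above can be made concrete with a little arithmetic. What follows is a minimal sketch, not a model of any real chip roadmap: the function name `growth_factor` and the 15-year span are illustrative assumptions, and the doubling period is left as a parameter because different figures are quoted for it.

```python
def growth_factor(years, doubling_months=18):
    """Return how many times capacity multiplies after `years` of steady doubling.

    `doubling_months` is an assumption the caller can change; 18 is only
    the commonly quoted figure.
    """
    doublings = (years * 12) / doubling_months
    return 2 ** doublings


print(growth_factor(15))                      # 1024.0 (ten doublings)
print(growth_factor(15, doubling_months=24))  # 2 ** 7.5, roughly 181
```

Small changes in the doubling period compound into very different outcomes, which is part of why the pattern is a trend of engineering rather than a law of physics.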

The properties of the materials used to produce microchips indicate, however, that Moore’s law has physical limits. The smaller circuits get, the less likely it is that the law can be sustained. “Eventually [ca. 2020, according to specialists] transistors will become so tiny that their silicon components will approach the size of molecules. At these incredibly tiny distances, the bizarre rules of quantum mechanics take over, permitting electrons to jump from one place to another without passing through the space between. Like water from a leaky fire hose, electrons will spurt across atom-size wires and insulators, causing fatal short circuits.”3

Moore’s Law started to sound like a warning: when circuits reach atomic dimensions and the undesired quantum effects manifest themselves, what will happen? Would engineers enter the quantum realm?

Answers to these questions are starting to be formulated.

Digital, electronic and quantum

In the attempt to divorce computational process from silicon, new theoretical approaches suggest possible future environments. The exotic designs imagined include optical computers,4 molecular computers,5 DNA computers,6 biocomputers7 and quantum computers.

Quantum computers use the intrinsic spin of subatomic particles to encode information. Much as electronic computers encode information in bits (binary values 1 or 0) associated with the voltage of transistors (on or off), quantum computers encode data in qubits (quantum bits). Qubits are essentially representations of subatomic particles that can occupy two different states, which can similarly be designated 1 or 0. However, unlike a bit, which is always either 1 or 0, a qubit can be in a superposition of 1 and 0 at the same time.

According to researcher Isaac Chuang, this ambiguous quality of the qubit – in fact, a property inherent to quantum mechanics – has powerful consequences, easily demonstrated when thinking of more than one qubit. “These qubits could simultaneously exist as a combination of all possible two-bit numbers: (00), (01), (10) and (11). Add a third qubit and you could have a combination of all possible three-bit numbers: (000), (001), (010), (011), (100), (101), (110) and (111). […] Line up a mere 40 qubits, and you could represent every binary number from zero to more than a trillion – simultaneously.”8
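The counting in this passage can be checked directly. The sketch below only enumerates the classical bit strings that a register of qubits could hold in superposition; it does not simulate a quantum computer, and the function names `basis_states` and `count_basis_states` are illustrative.

```python
from itertools import product


def basis_states(n_qubits):
    """List every n-bit string that a register of n qubits can combine."""
    return ["".join(bits) for bits in product("01", repeat=n_qubits)]


def count_basis_states(n_qubits):
    """Each added qubit doubles the number of bit strings in the combination."""
    return 2 ** n_qubits


print(basis_states(2))         # ['00', '01', '10', '11']
print(len(basis_states(3)))    # 8
print(count_basis_states(40))  # 1099511627776, just over a trillion
```

Listing the strings stops being practical long before 40 qubits, which is why the 40-qubit case is computed with `count_basis_states` rather than enumerated.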

The question is how to implement these computers. In 1996, after two earlier attempts at building a quantum computer – one theoretical, by Peter Shor,9 and one practical, by quantum mechanist Seth Lloyd – Isaac Chuang and Neil Gershenfeld, from MIT Media Lab, envisioned a way.10 First, they decided to work with neutrons and protons, since these are naturally shielded from external disturbances by their surrounding cloud of electrons. Then, they used nuclear magnetic resonance (NMR), an already well-developed technology used commercially for scanning the human body, to manipulate the spin of the subatomic particles.

According to the authors’ formula: “Say you want to carry out a logical operation using chloroform – something like, ‘if carbon is 1, then hydrogen is 0.’ You just suspend the chloroform molecules in a solvent, and put a sample in the spectrometer’s main magnetic field to line up the nuclear spins. Then you hit the sample with a brief radio-frequency pulse at just the right frequency. The hydrogen spin will either flip or not flip, depending on what the carbon is doing – exactly what you want for an if-then operation. By hitting the sample with an appropriately timed sequence of such pulses, moreover, you can carry out an entire quantum algorithm – without ever once having to peek at the nuclear spins and ruin the quantum coherence.”11
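The conditional flip described in the quotation corresponds to what is usually called a controlled-NOT gate. The sketch below is an abstract illustration under that assumption: it treats the carbon spin as the control and the hydrogen spin as the target, represents the two-spin state as four amplitudes, and ignores the physics of the radio-frequency pulses entirely. The names `LABELS`, `cnot` and `probabilities` are hypothetical.

```python
import math

# Amplitudes are ordered by the two-bit label "carbon, hydrogen".
LABELS = ["00", "01", "10", "11"]


def cnot(state):
    """Flip the hydrogen (target) bit wherever the carbon (control) bit is 1."""
    a00, a01, a10, a11 = state
    # Only the amplitudes of |10> and |11> trade places.
    return [a00, a01, a11, a10]


def probabilities(state):
    """Return the measurement probability of each label with nonzero amplitude."""
    return {
        label: round(abs(amp) ** 2, 3)
        for label, amp in zip(LABELS, state)
        if abs(amp) > 1e-12
    }


# Carbon is 1, hydrogen is 0: the hydrogen spin flips.
print(probabilities(cnot([0, 0, 1, 0])))  # {'11': 1.0}

# Carbon in an equal superposition of 0 and 1, hydrogen 0.
s = 1 / math.sqrt(2)
print(probabilities(cnot([s, 0, s, 0])))  # {'00': 0.5, '11': 0.5}
```

In the second case the single operation acts on both branches of the superposition at once, and the two spins end up correlated: measuring one fixes the other.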

Since 1996, countless proposals, mechanisms and architectures have been tested: electrons in electric fields; nuclear spin (both clockwise and counter-clockwise); atoms in their ground and excited states; and horizontally and vertically polarized photons, amongst others.

Regardless of the architecture, the main advantage of quantum computers over their classical counterparts is the ability to perform many tasks at once. There is, moreover, a clear principle: the larger the number of qubits, the more powerful the calculation. The greater the processing capacity, the greater the capacity to simulate more complex quantum systems. The better the simulator, the better the capacity of science to reveal our nature, as Feynman suggested.

The first systems with two qubits were developed in 1996. Today, in 2019, there are already systems with dozens of qubits. Within the next five to ten years, Seth Lloyd predicts, quantum computer evolution will go from 50 qubits to 5,000 qubits, initially operating as specialized systems and, eventually, as general use computers.12

Overcoming the physical limits that constrain Moore’s law and achieving extraordinary computational capacity are considerable results of this research. However, according to Lloyd, the most interesting is yet to come. In the words of the professor of Physics and Mechanical Engineering at MIT, these new machines point towards a new stage in machine evolution: “Even with small quantum computers we will be able to expand the capability of machine learning by sifting vast collections of data to detect patterns and move on from supervised-learning (‘That squiggle is a 7’) toward unsupervised-learning – systems that learn to learn.”13

“The universe is a quantum computer. Biological life is all about extracting meaningful information from a sea of bits. For instance, photosynthesis uses quantum mechanics in a very sophisticated way to increase its efficiency. Human life is expanding on what life has always been – an exercise in machine learning.”14

Quantum and Other Quantum Horizons

Science, art and technology utilize different methods to research, develop, test, evaluate and communicate theories.

The exhibition and seminar Consciência Cibernética [?] Horizonte Quântico (Cybernetic Consciousness [?] Quantum Horizon) – a large research project led by the staff of Itaú Cultural – and the installation Quantum were designed as possible methods.

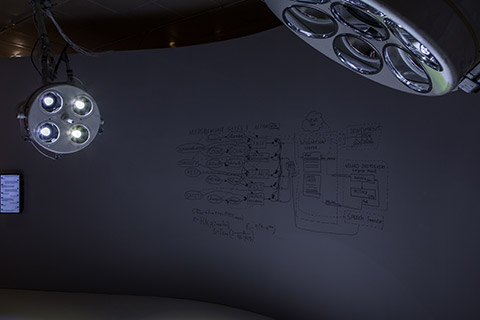

In 2018, I was invited to join Itaú Cultural’s research team for the exhibition. That work resulted in a new invitation. This time, I was tasked with building a sort of quantum simulator, that is to say, developing an artifact capable of making visitors experience the feeling of penetrating a subatomic universe, ruled by quantum mechanics.

Architecture

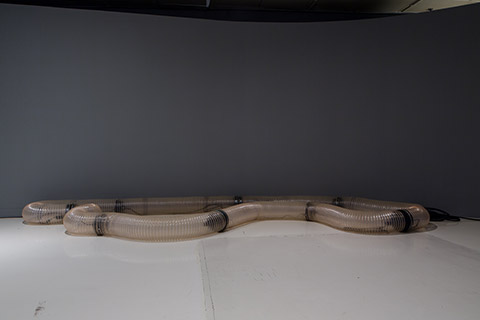

Quantum is an immersive and interactive installation consisting of an optical device, computers, customized software, infrared cameras and an audio system.

The device is architectonic in scale. Eight wooden modules, aligned and connected to each other, form an ellipsoidal tubular structure measuring 4.15 meters wide, 2.27 meters tall and 15.24 meters long.

Inside the tube, the ceiling, floor and one side are mirrored, while the wall on the other side works as a projection screen, showing computer-generated images. The mirrors reflect the computer images and the users' interactions.

Interaction rules

Inside the tube, cameras capture the image of the visitors. The system interprets that image and turns it into a silhouette. Each silhouette is then treated as a single microscopic particle. Each particle has a probability of being at a given place at a given time.
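The text does not describe how the installation's software extracts silhouettes. Purely as an illustration of the idea, the sketch below assumes a grayscale camera frame stored as rows of brightness values, thresholds it into a binary silhouette, and reduces that silhouette to a single point that could stand in for the "particle". The functions `silhouette_mask` and `centroid`, the threshold of 128 and the sample frame are all hypothetical.

```python
def silhouette_mask(frame, threshold=128):
    """Mark each pixel 1 if it is at least as bright as the threshold, else 0."""
    return [[1 if pixel >= threshold else 0 for pixel in row] for row in frame]


def centroid(mask):
    """Return the mean (x, y) position of the silhouette, or None if it is empty."""
    points = [
        (x, y)
        for y, row in enumerate(mask)
        for x, value in enumerate(row)
        if value
    ]
    if not points:
        return None
    count = len(points)
    return (sum(x for x, _ in points) / count, sum(y for _, y in points) / count)


# A tiny 4x4 "infrared" frame with a bright 2x2 body in the middle.
frame = [
    [10, 10, 10, 10],
    [10, 200, 220, 10],
    [10, 210, 230, 10],
    [10, 10, 10, 10],
]
mask = silhouette_mask(frame)
print(mask[1])         # [0, 1, 1, 0]
print(centroid(mask))  # (1.5, 1.5)
```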

The system is constantly evolving and is capable of simultaneously simulating a range of silhouettes in the present, past and future. For instance, it can simulate a silhouette derived directly from the interactions of a first visitor, V1, or it can simulate V1 and V2, just as it can simulate V1+V2. It can also simulate many more silhouettes, with many degrees of freedom in time and space. Imagine, if you can, an array of silhouettes moving. Imagine they all have the possibility of being in multiple states and times.

The same happens with the sound

Inside the tube, microphones capture the ambient sound and this information is transformed into sound units that possess the ability to be in one or more places at the same time. And that’s not all.

Two or more particles (visual silhouettes and/or sound units) can become entangled. Quantum entanglement is another property that collections of particles may have. In Quantum, entangled particles and silhouettes act like synchronized dancers with unpredictable, yet coordinated, movements.
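The image of synchronized dancers with unpredictable yet coordinated movements can be illustrated with a toy sampler. The sketch below assumes the simplest entangled pair, in which both particles always give the same result when measured the same way, and it draws outcomes with an ordinary random number generator. It reproduces only that one correlation, not the full behaviour of entangled particles, and it is not taken from the installation's software; `measure_pair` and the seed value are hypothetical.

```python
import random


def measure_pair(rng):
    """Sample one joint measurement of a pair whose outcomes always agree."""
    outcome = rng.choice(["00", "11"])
    return int(outcome[0]), int(outcome[1])


rng = random.Random(7)  # fixed seed so repeated runs give the same samples
samples = [measure_pair(rng) for _ in range(1000)]

agreements = sum(a == b for a, b in samples)
ones = sum(a for a, _ in samples)

print(agreements)  # 1000: the two results always match
print(ones)        # close to 500: each result on its own looks like a coin toss
```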

What shall we do?

Among physicists, the consensus is that quantum mechanics is the best model for describing reality at the minuscule scale. And if the model is invisible, counterintuitive and strange, all we have for now are Richard Feynman’s suggestions:

1. To build devices that use other more familiar and controllable systems to simulate the laws of quantum systems;

2. To study and understand what quantum physicists and mechanists are talking about.

Luckily, on this path, we have many guides.

In "There’s Plenty of Room at the Bottom," a lecture renowned as one of the main inspirations behind nanotechnologies, Feynman predicts what we can expect of the microscopic world: “When we get to the very, very small world we have a lot of new things that would happen that represent completely new opportunities for design. Atoms on a small scale behave like nothing on a large scale, for they satisfy the laws of quantum mechanics. So, as we go down and fiddle around with the atoms down there, we are working with different laws, and we can expect to do different things.”

Safe travels!

1 FEYNMAN, Richard P. "Simulating physics with computers." International Journal of Theoretical Physics, v. 21, n. 6/7, p. 486, 1982.

2 Available at: <https://people.eecs.berkeley.edu/~christos/classics/Feynman.pdf>. Retrieved on: Mar 29, 2019.

3 KAKU, Michio. “What will replace silicon?” Time Magazine, Jun 19, 2000, p. 62.

4 Optical computers use photons (light particles), in place of electrons, to transport data. Photons have an advantage over electrons: they can overlap without interfering with one another, which makes it possible to break through the two-dimensional chip barrier. Moreover, a laser can produce up to billions of photon streams, each of them processing calculations “in parallel,” that is, independently.

5 Molecular computers use small organic molecules instead of electronic switches. Synthesized in laboratories, these molecules have properties that allow them to act like electronic components, that is to say, to switch the current on or off. The advantage of this technology lies in the fact that the molecules are potentially easy and cheap to manufacture. Apart from that, unlike silicon components, these molecules are ultra-small, making it possible to produce a chip with billions of switches and components. Following this logic, researchers claim that future molecular memories will be able to hold a million times more than the best current semiconductor chip. This would allow a lifetime of experiences to be stored in gadgets cheap and small enough to be incorporated into clothing. One disadvantage to consider is that molecular devices synthesized in chemical tanks are still prone to defects.

6 Another approach to molecular computing is to turn DNA molecules into computational devices. The idea is to use the double helix of the DNA to codify a sequence of data: instead of a binary code (1 or 0), utilized in classical computing, the four nitrogenous bases (A, T, C and G) would be used.

7 Amongst the other projects, the biocomputational approach is perhaps the most exotic. From a physical-logical standpoint, the “circuitry” of a biocomputer is built from two genes. These genes are mutually antagonistic; in other words, when one of them is active, the other becomes inactive, and vice versa. In addition, this mechanism (known as a “flip-flop”) also produces visual information. When exposed to a laser beam, one of the genes creates a fluorescent protein, allowing an observer to detect when a cell shifts between states. The idea here is to program the biological switch, that is, to change its state by making the active gene inactive. This can be achieved by external means, such as the introduction of chemical substances or temperature changes in the environment. In an interview with the MIT Technology Review, one of the leaders in this approach, Tom Knight, estimates that, in the near future, these biological machines will be used in various endeavors. These include automated insulin injection; “smart” dressings able to analyze wounds and treat them automatically; and even a biochip (a hybrid cell-electronic circuit) able to detect food poisoning and other toxins. To Knight and other researchers, the biocomputer should be understood less as an attempt to replace the electronic computer and more as an interface or device capable of manipulating biochemical systems and connecting information technology to biotechnology.

8 WALDROP, Mitchell M. “Quantum Computing.” MIT Technology Review, v. 103, n. 3, p. 62, May–Jun 2000. Special edition.

9 Peter Shor, Professor of Applied Mathematics at MIT, created in 1994 a quantum algorithm capable of finding the prime factors of large numbers. In 2016, researchers from MIT and the University of Innsbruck (Austria) reported in an article published in Science that they had designed and built a quantum computer to run Shor’s algorithm.

10 GERSHENFELD, Neil; CHUANG, Isaac. “Calcul quantique avec des molecules.” Pour la Science, v. 250, Aug. 1998, pp. 60–65.

11 WALDROP, Mitchell M. “Quantum Computing.” MIT Technology Review, v. 103, n. 3, p. 64, May–Jun 2000.

12 D-Wave, the first company committed to developing quantum computers for research institutions such as Google, NASA and Intel, was created in 1999. IBM has been building and providing prototypes to researchers around the world since 2016. The first quantum computer to be commercially available for general use, the Q System One, was announced by IBM in 2019.

13 Available at: <http://longnow.org/seminars/02016/aug/09/quantum-computer-reality/>. Retrieved on: Oct 14, 2019.

14 Available at: <http://longnow.org/seminars/02016/aug/09/quantum-computer-reality/>. Retrieved on: Mar 29, 2019.